In an age where Artificial Intelligence (AI) is starting to feel like a buddy we rely on daily, it is easy to forget that AI has flaws and that it is not our friend. The European Disability Forum (EDF) workshop for members on AI in public and private services, held on 6 November 2023, served as a much-needed reality check, mixing optimism with caution as we explored AI’s role in the lives of people with disabilities.

Maureen Piggot, member of the EDF Executive Committee, gave an opening speech. She explained the need for inclusive design methodology, diverse data sets and the inclusion of persons with disabilities in the AI community.

The Dutch Childcare Benefit Scandal – A Warning for AI in Social Security

Kave Noori, AI Policy Officer at EDF, presented the Dutch childcare benefit scandal as a case study for AI in social security. He described it as the biggest AI scandal in Europe to date. The scandal involved the use of AI systems to detect fraud, which led to discrimination against people based on their nationality. Many families who were legally entitled to the benefit were falsely accused of fraud and suffered serious consequences, including financial ruin, loss of employment, loss of custody of their children and, in some cases, suicide.

Kave emphasised how important it is for the disability rights movement to study this case, as people with disabilities can be affected in similar ways. He also pointed out that Amnesty International has written a report on the Dutch scandal, and that a panel of legal experts from the Council of Europe concluded in a report that the same thing could happen in any country in Europe.

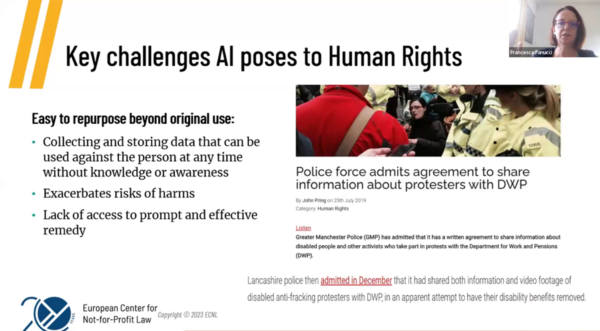

AI and Human Rights: The Urgent Need for Transparency and Accountability

Francesca Fanucci, Senior Legal Advisor at the European Centre for Not-For-Profit Law, discussed the need for AI to respect human rights. She emphasised the importance of examining AI’s impact on civil, political, economic, socio-cultural, and humanitarian rights. Francesca also recognised the potential for discrimination through bias in data or algorithm development.

Francesca discussed a case in Manchester (UK) where the police shared CCTV (closed-circuit television, also known as video surveillance) footage of an environmental demonstration with the authority that decides on benefits for persons with disabilities. The police explained that the CCTV cameras were used to maintain public order and to ensure that no one was harmed. It was only later revealed that the police were using facial recognition software on the cameras and that the footage had been shared with the Department for Work and Pensions (DWP). The DWP matched footage of the protesters with a list of people receiving disability benefits. Some had their benefits cancelled and were charged with fraud. Francesca emphasised the need for transparency and accountability when the government uses AI. She suggested that one solution is to require governments to carry out a fundamental rights impact assessment before using AI systems.

Inclusive Healthcare in the Age of AI: Opportunities and Obstacles

Janneke van Oirschot, Research Officer at Health Action International, spoke about how AI in healthcare can be helpful but also risky for persons with disabilities. AI can help personalise healthcare, monitor patients’ health and help doctors make better decisions. However, there are concerns about data privacy, the way AI makes decisions and the challenges of using AI in healthcare. Janneke described different ways AI can be used in healthcare: deciding which patients need help first, diagnosing diseases, supporting treatment decisions, robotic surgery and patient monitoring. She warned that people are too enthusiastic about AI changing healthcare and that there are risks for some patients that we need to consider, such as being treated unfairly and having difficulty getting care. Janneke said that people with disabilities can be helped by AI in healthcare, but they can also be excluded due to poor data, insufficient research and inadequate planning.

Facial Recognition Fails People with Down’s Syndrome

Gemma Galdon Clavell, CEO of ETICAS, a non-profit organisation that tests algorithms, presented a report on the impact of facial recognition on people with Down’s syndrome. She explained that AI systems are trained on historical data and can be useful in many situations, such as finding a route with a navigation system. However, when ETICAS tested a facial recognition system used by a Swiss insurance company, it found large inaccuracies in age prediction for people with Down’s syndrome. Men were estimated to be much older and women much younger than their actual age. Some were even categorised as being under five years old. This can have a major impact on access to services, as adults with Down’s syndrome can be denied access if the system categorises them as underage. Gemma called for the algorithms to be publicly scrutinised and for the disability community to be involved in the public debate on technology. She also encouraged people to join ETICAS’ ‘AI does not work for me’ campaign.